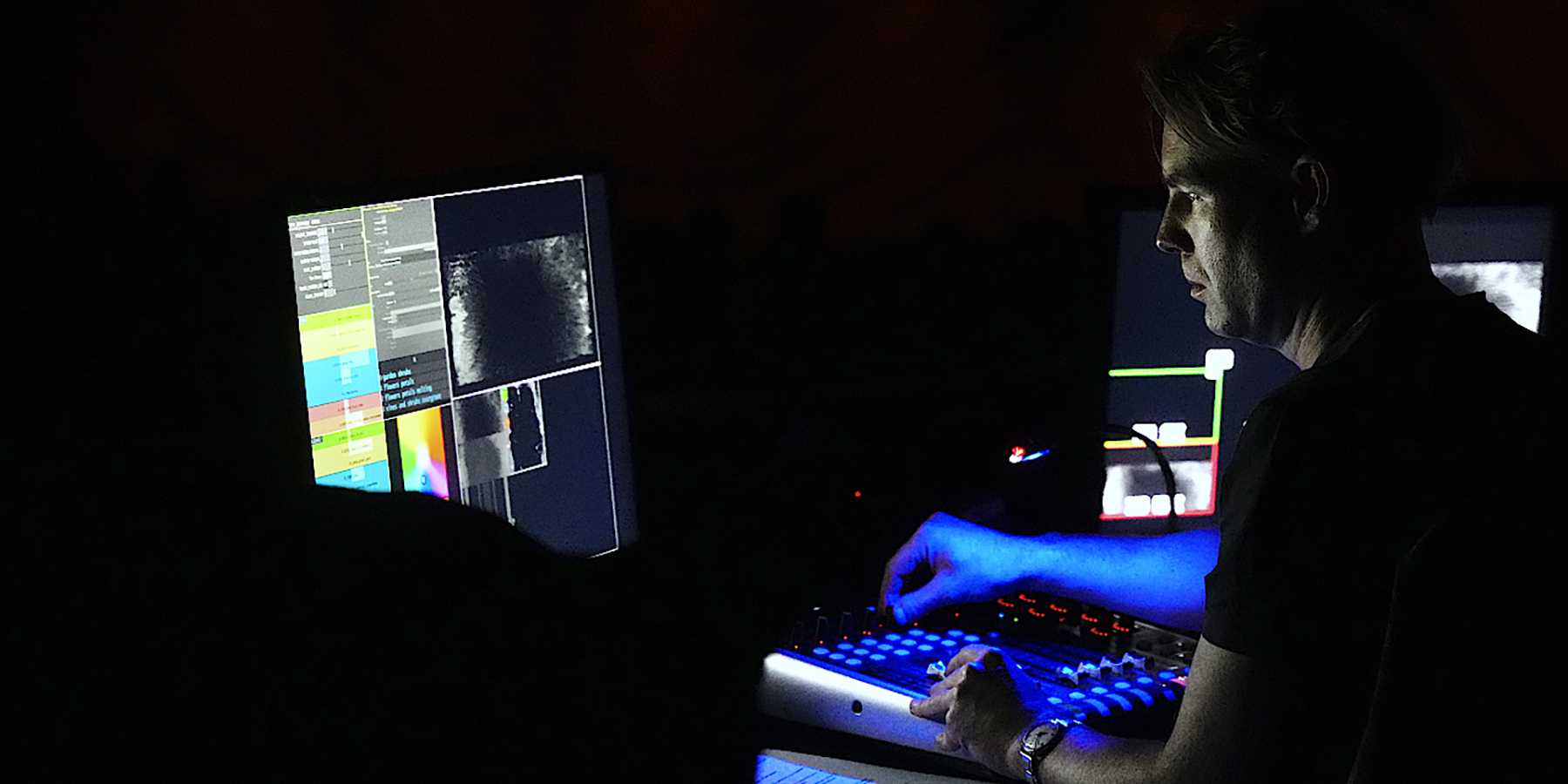

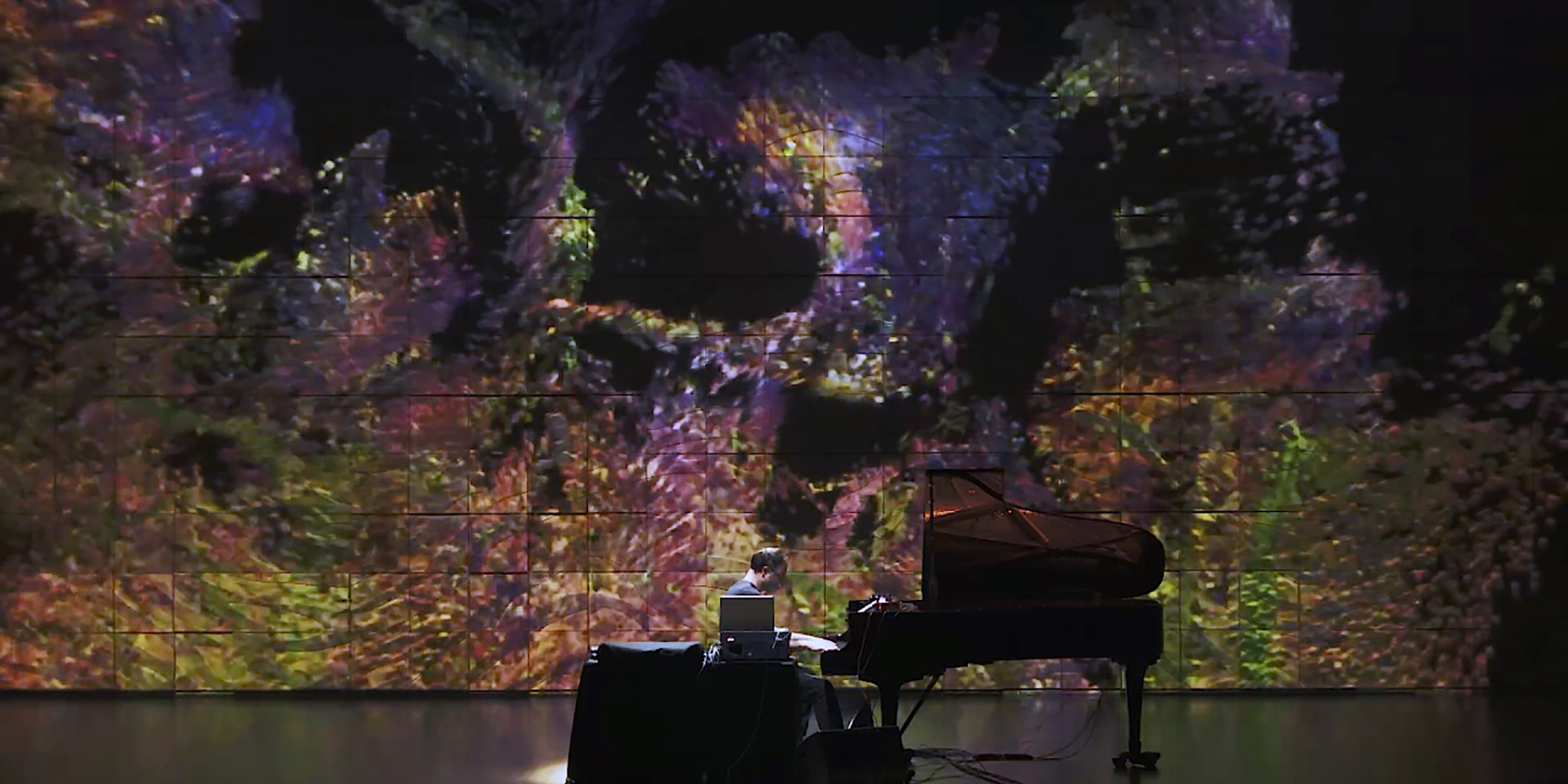

Because we were introducing artificial intelligence into an already technical and bespoke procedural art pipeline, the design process for this project was deliberately exploratory and iterative. Our familiarity with the venue helped shape the creative direction. Having previously 3D-mapped Elisabeth Murdoch Hall, we were attuned to the space, particularly its iconic wood-panelled interior designed by ARM Architecture. Rather than treating the panelling as an obstacle, we embraced it as both a constraint and a creative canvas for our projection-mapped content.

Despite the experimental nature of the project, the design process was still grounded in a fairly structured method. Initially, Simon developed early visual sketches and procedural systems in TouchDesigner, testing and refining ideas in response to the music. Some visual systems evolved over time; others were scrapped entirely. Each system was built with controllable parameters, enabling Simon to manipulate the designs live via a DMX console. This left room for spontaneity and unpredictability, so no two performances would ever be the same. Shared audio-reactive systems and modular visual components kept the interpretation of musical dynamics consistent across the performance visuals.

A key part of the design process was the integration of a Stable Diffusion model into the real-time pipeline. As an open-source text-to-image model, Stable Diffusion allowed Simon to generate stylised visuals on the fly. To ensure consistency and avoid unwanted or jarring outputs, the AI was carefully constrained using curated prompts and visual guidance, mostly focused on painterly and geometric styles. Because the model was taking guidance from Simon's designs and prompts, the result felt like a live conversation: an interplay between crafted digital design and generative AI, with both happening simultaneously. Our approach produced a suite of ten distinct real-time digital artworks, each one designed to enhance and respond to the emotional landscape of Luke Howard’s compositions.